Writing a Web Crawler in Python


With a web scraper, you can mine data about a set of products, get a large corpus of text or quantitative data to play around with, get data from a site without an official API, or just satisfy your own personal curiosity.

The scraper will be easily expandable so you can tinker around with it and use it as a foundation for your own projects scraping data from the web. To complete this tutorial, you'll need a local development environment for Python 3.


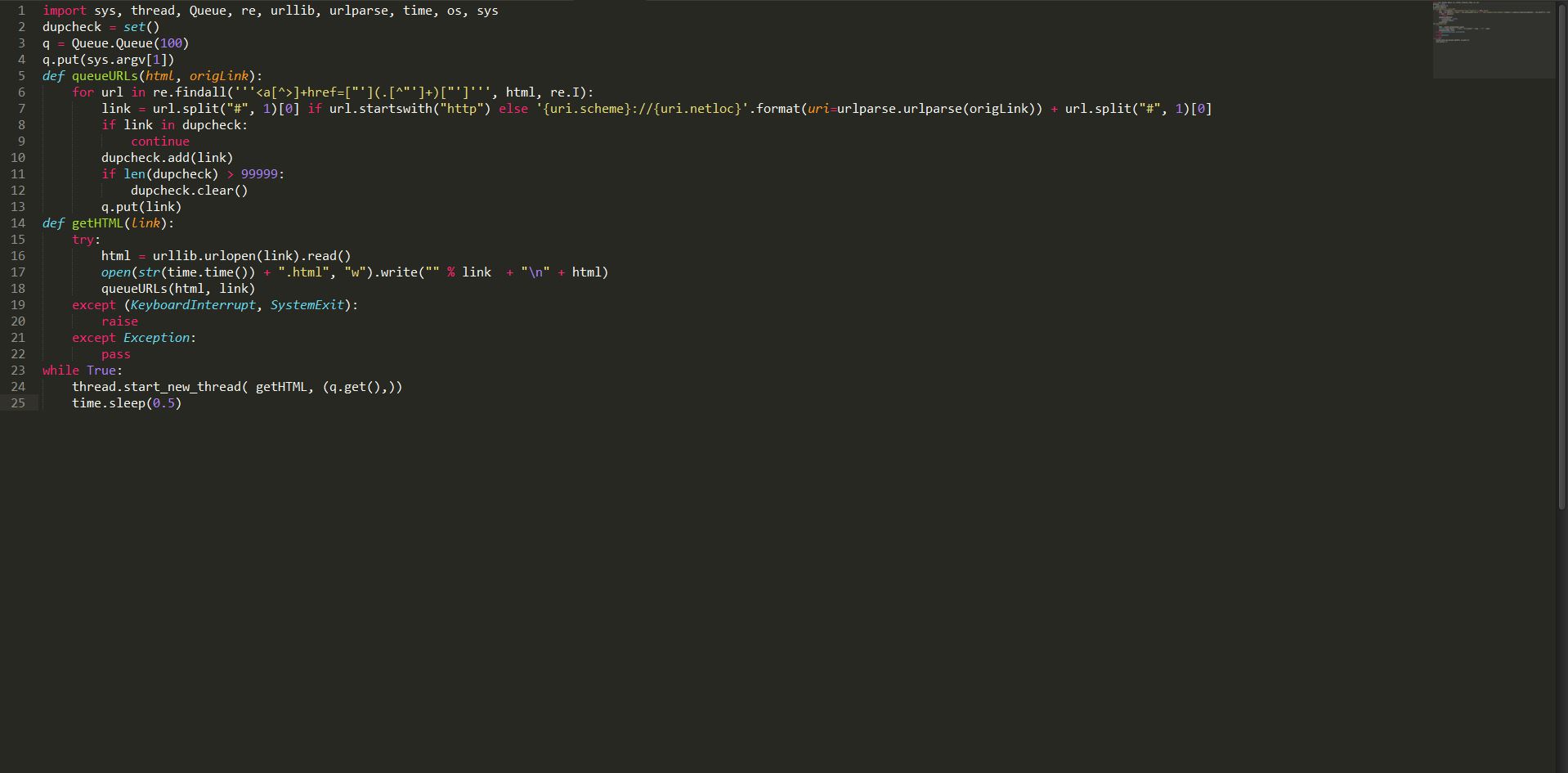

You can build a scraper from scratch using modules or libraries provided by your programming language, but then you have to deal with some potential headaches as your scraper grows more complex.

For example, you'll need to handle concurrency so you can crawl more than one page at a time. And you'll sometimes have to deal with sites that require specific settings and access patterns.
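To give a sense of what handling concurrency yourself involves, here is a minimal sketch using only the standard library. The `fetch` function is a hypothetical stand-in that returns fake HTML so the example runs offline, and the `example.com` URLs are placeholders; neither comes from the tutorial.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch(url):
    # Hypothetical stand-in for a real HTTP request, so the sketch runs offline.
    return f"<html><title>{url}</title></html>"


def crawl_concurrently(urls, max_workers=4):
    """Fetch several pages at once and return a {url: body} mapping."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the input URLs.
        bodies = pool.map(fetch, urls)
        return dict(zip(urls, bodies))


pages = crawl_concurrently([
    "https://example.com/page/1",
    "https://example.com/page/2",
    "https://example.com/page/3",
])
print(len(pages))
```

Even this small version leaves out retries, rate limiting, and error handling, which is the kind of bookkeeping a scraping library takes care of.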


You'll have better luck if you build your scraper on top of an existing library that handles those issues for you. For this tutorial, we're going to use Python and Scrapy to build our scraper.


Scrapy is one of the most popular and powerful Python scraping libraries; it takes a "batteries included" approach to scraping, meaning that it handles a lot of the common functionality that all scrapers need so developers don't have to reinvent the wheel each time. It makes scraping a quick and fun process! Scrapy, like most Python packages, is on PyPI, the Python Package Index, so it can be installed with pip.

If you have a Python installation like the one outlined in the prerequisite for this tutorial, you already have pip installed on your machine, so you can install Scrapy by running `pip install scrapy`. If you run into any issues with the installation, or you want to install Scrapy without using pip, check out the official installation docs.

With Scrapy installed, let's create a new folder for our project. You can do this in the terminal by running `mkdir` followed by the folder name you want to use.

Then create a new Python file for our scraper called scraper.py.


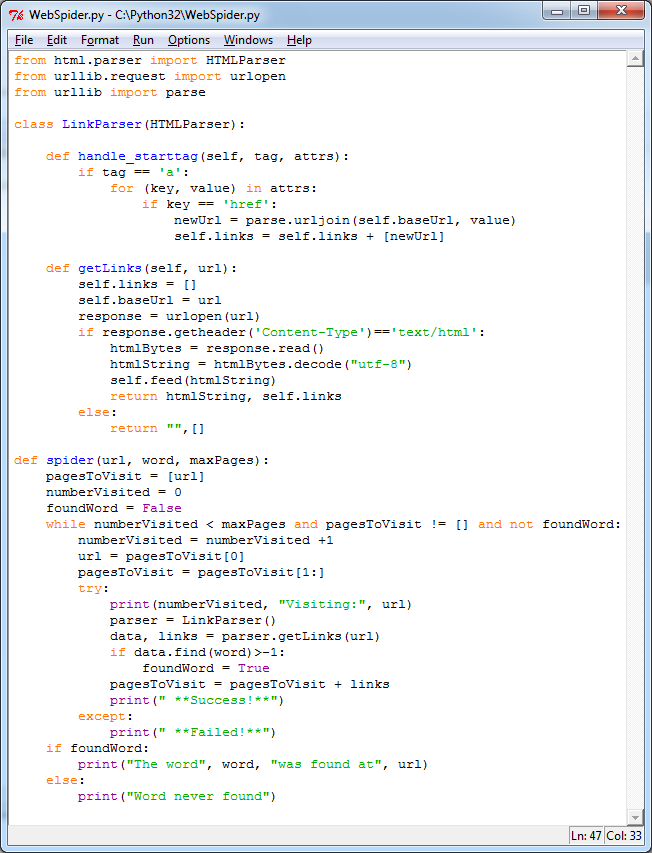

We'll place all of our code in this file for this tutorial. You can create this file in the terminal with the touch command, like this: `touch scraper.py`. We'll start by making a very basic scraper that uses Scrapy as its foundation.

To do that, we'll create a Python class that subclasses scrapy.Spider, a basic spider class provided by Scrapy.


This class will have two required attributes: name, which is just a name for the spider, and start_urls, a list of URLs to start crawling from. First, we import scrapy so that we can use the classes that the package provides. Next, we take the Spider class provided by Scrapy and make a subclass out of it called BrickSetSpider. Think of a subclass as a more specialized form of its parent class. The Spider class has methods and behaviors that define how to follow URLs and extract data from the pages it finds, but it doesn't know where to look or what data to look for.

By subclassing it, we can give it that information. Finally, we give our scraper a single URL to start from. Now let's test out the scraper. You would typically run a Python file with the python command. However, Scrapy comes with its own command line interface to streamline the process of starting a scraper.


Start your scraper with the command `scrapy runspider scraper.py`. We've created a very basic program that pulls down a page, but it doesn't do any scraping or spidering yet.

If you look at the page we want to scrape, take note of how it is structured. When writing a scraper, it's a good idea to look at the source of the HTML file and familiarize yourself with the structure. Selectors are patterns we can use to find one or more elements on a page so we can then work with the data within the element.

If you look at the HTML for the page, you'll see that each set is specified with the class set.

Since we're looking for a class, we'd use .set for our CSS selector. All we have to do is pass that selector into the response object by calling response.css('.set').


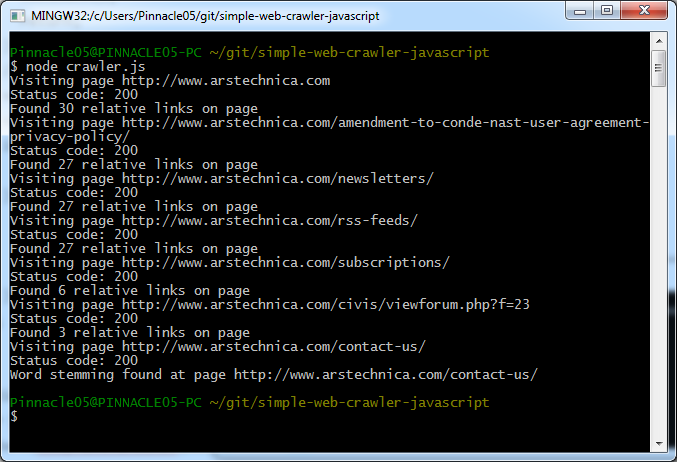

Interested in learning how Google, Bing, or Yahoo work? Wondering what it takes to crawl the web, and what a simple web crawler looks like? In under 50 lines of Python version 3 code, here's a simple web crawler!
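The crawler code itself is not included here, so what follows is a minimal sketch of one way to write it with only the standard library, not the original author's program. The names `LinkParser`, `download`, and `crawl` are hypothetical, and the `fetch` parameter is an assumption added so the crawl logic can be exercised without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects absolute URLs from the href of every <a> tag."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


def download(url):
    # Real network fetch; pass a different callable to crawl() to stay offline.
    with urlopen(url) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl(start_url, max_pages=10, fetch=download):
    """Breadth-first crawl; returns the visited URLs in visiting order."""
    queue, seen, visited = deque([start_url]), {start_url}, []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except Exception:
            continue  # skip pages that fail to load
        visited.append(url)
        parser = LinkParser(url)
        parser.feed(html)
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited
```

Calling `crawl("https://example.com/", max_pages=5)` would fetch real pages. A polite crawler would also respect robots.txt and pause between requests, which this sketch leaves out.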


It was midnight on a Friday, my friends were out having a good time, and yet I was nailed to my computer screen typing away. I was working on something that I thought was genuinely interesting and awesome.


Machine learning requires a large amount of data.

2018 ©